Player represents a media handler that provides the methods to control aspects of media presentation common to all time-based media types. Its capabilities include control of media progression: start, stop, setting the media time, etc. Media-type-specific features, such as those for audio, video, and MIDI, are defined as specialized Control interfaces that can be fetched from the Player.

The following defines the specific behavior and implementation requirements for an MMAPI implementation in the context of JSR 272.

1.1 Player Creation

MMAPI Players provide the API to control the presentation of audio and video components. They are created as a result of tuning to a broadcast service with the Service Selection API (see ServiceContext). Creating Players directly with javax.microedition.media.Manager from URIs that reference the broadcast content is NOT supported. A Player created through the Service Selection API is already in the Realized state, so its Controls can be obtained immediately.

JSR 272 makes no assumption about how many Players will be created as a result of tuning to a broadcast. For example, if the broadcast contains one audio and one video track, it is conceivable that a single Player will be created to play back the synchronized audio and video. However, if there is more than one video track, each with its matching synchronized audio track, more than one Player may be created to allow flexible placement of the video components and individual control of each Player.

1.2 Media Time

For broadcast content, JSR 272 loosely adopts the notion of "Normal Play Time" (NPT), a continuous timeline spanning the duration of the broadcast, as the semantics of the Player's media time. As a result, the Player's media time may not begin at 0, and the duration of the content may not be known. When the duration of the media is not known, Player.getDuration MUST return Player.TIME_UNKNOWN.

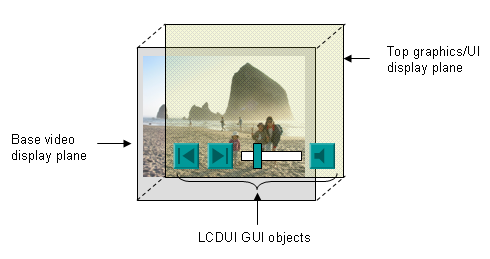
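Because media time follows NPT, an application that wants "time since the viewer tuned in" has to measure relative to the first media time it observes rather than assume a start of 0, and it has to handle an unknown duration. The sketch below illustrates this under stated assumptions: TimeSource is a hypothetical stand-in for the two Player methods used (getMediaTime and getDuration, both reported by MMAPI in microseconds), and TIME_UNKNOWN is given the same value, -1, as MMAPI's Player.TIME_UNKNOWN. The class and helper names are illustrative only.

```java
// Sketch only: TimeSource is a hypothetical stand-in for the subset of
// javax.microedition.media.Player used here, so the example runs without MMAPI.
class MediaTimeSketch {
    // Same value as MMAPI's Player.TIME_UNKNOWN.
    static final long TIME_UNKNOWN = -1L;

    // Hypothetical stand-in for Player.getMediaTime() / Player.getDuration();
    // MMAPI reports both values in microseconds.
    interface TimeSource {
        long getMediaTime();
        long getDuration();
    }

    /** Microseconds elapsed since a reference media time, or TIME_UNKNOWN. */
    static long elapsedSince(long referenceTime, long currentTime) {
        if (referenceTime == TIME_UNKNOWN || currentTime == TIME_UNKNOWN) {
            return TIME_UNKNOWN;
        }
        // NPT may start anywhere, so only differences are meaningful here.
        return currentTime - referenceTime;
    }

    /** Duration in whole seconds, or "unknown" when TIME_UNKNOWN is reported. */
    static String describeDuration(TimeSource player) {
        long d = player.getDuration();
        if (d == TIME_UNKNOWN) {
            return "unknown";
        }
        return (d / 1000000L) + " s";
    }

    public static void main(String[] args) {
        // Simulated live broadcast: media time is one hour into the NPT
        // timeline and the total duration is not known.
        TimeSource live = new TimeSource() {
            public long getMediaTime() { return 3600000000L; }
            public long getDuration() { return TIME_UNKNOWN; }
        };
        long tuneInTime = live.getMediaTime();
        System.out.println(describeDuration(live));
        System.out.println(elapsedSince(tuneInTime, tuneInTime + 5000000L));
    }
}
```

Propagating TIME_UNKNOWN instead of substituting 0 keeps a live broadcast from being shown with a misleading progress bar.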

1.3 Video Display and Graphics Overlays

When the system property "microedition.broadcast.supports.overlay" has the value "true", graphics overlay MUST be supported as described below. JSR 272 supports overlaying graphics and GUI elements by displaying the video on the base display plane of the graphics subsystem. Any GUI or graphics elements created by the GUI toolkit (e.g. LCDUI) must be displayed on a layer above the video.
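An application can test for this guarantee before drawing over video. The following is a minimal sketch: only the property name and the "true" value come from the text above; the class, constant, and method names are hypothetical, and the messages printed in main are illustrative.

```java
// Sketch only: class and method names are hypothetical; the property name
// and the "true" value are taken from the JSR 272 text.
class OverlaySupport {
    static final String OVERLAY_PROPERTY = "microedition.broadcast.supports.overlay";

    /** True only when the platform reports the overlay property as "true". */
    static boolean supportsOverlay() {
        // System.getProperty returns null when the property is undefined,
        // and "true".equals(null) is false, so no null check is needed.
        return "true".equals(System.getProperty(OVERLAY_PROPERTY));
    }

    public static void main(String[] args) {
        if (supportsOverlay()) {
            System.out.println("Overlay supported: GUI elements render above the video plane.");
        } else {
            System.out.println("Overlay not guaranteed on this device.");
        }
    }
}
```

Comparing against the literal "true" treats a missing property the same as "false", which is the conservative reading when a device makes no overlay guarantee.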

For example, if a video Player is initialized in USE_DIRECT_VIDEO mode to be displayed on an LCDUI canvas (see presentation example), any other graphics or GUI components created in the area occupied by the video player must be rendered on top of the video.

Transparency and/or alpha compositing of a graphics component or GUI item can only be supported if there is a well-defined way to specify the overlay object's transparency and/or alpha value. For graphics components, if the graphics format itself supports transparency (e.g. GIF) or alpha values (e.g. PNG), then transparency or alpha compositing MUST be supported if the system supports overlay. Note, however, that for GUI items, MIDP 1 and MIDP 2 do not support specifying transparency or alpha values.

1.4 Recording

Real-time recording of media content is supported in MMAPI with javax.microedition.media.control.RecordControl. If an implementation supports recording and the application has permission to record the content, a RecordControl can be fetched from the Player.

Recordings can also be scheduled ahead of time; this is handled by RecordingScheduler and its associated classes.

Recorded content can be played back using MMAPI by creating a Player from the URL of the recorded content, as long as the application has permission (DRM rights) to play the content. If the application does not have playback permission for the content, playback will fail as specified by MMAPI.

Recorded content may or may not be superdistributed (shared, or exported to other applications or devices), as specified by the rights objects associated with the recorded content.

For recordings that are allowed to be superdistributed, an application can read the raw recorded content using the FileConnection API from the Generic Connection Framework. For recordings that are not allowed to be superdistributed, access to the raw data MUST fail as specified by the FileConnection API.

See the DRM section for further discussion of DRM-related issues.

1.5 Mandatory Controls

The following table lists the MMAPI controls that a JSR 272 implementation must provide, and the conditions under which each is required:

| MMAPI Control | Implementation Requirement |
|---|---|
| ToneControl | Mandatory (per MMAPI requirements) |
| VolumeControl | Mandatory |
| VideoControl | Mandatory if the device supports video playback |
| RecordControl | Mandatory if the device supports recording |
| RateControl | Mandatory if the device supports time-shifted playback |
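The table can be read as a function from device capabilities to required controls. The sketch below encodes it directly; the three boolean capability flags and the class and method names are hypothetical, while the control names are the MMAPI interface names from the table. It uses plain arrays rather than collections so that it stays close to what a CLDC-based device would accept.

```java
// Sketch only: the capability flags and names are hypothetical; the rules
// mirror the mandatory-controls table above.
class MandatoryControls {
    /** MMAPI control names this table requires for the given device capabilities. */
    static String[] requiredControls(boolean video, boolean recording, boolean timeShift) {
        String[] buf = new String[5];
        int n = 0;
        buf[n++] = "ToneControl";                   // mandatory per MMAPI requirements
        buf[n++] = "VolumeControl";                 // always mandatory
        if (video) buf[n++] = "VideoControl";       // device supports video playback
        if (recording) buf[n++] = "RecordControl";  // device supports recording
        if (timeShift) buf[n++] = "RateControl";    // device supports time-shifted playback
        String[] result = new String[n];
        System.arraycopy(buf, 0, result, 0, n);
        return result;
    }

    public static void main(String[] args) {
        // Example: a video-capable device without recording or time-shift support.
        String[] req = requiredControls(true, false, false);
        for (int i = 0; i < req.length; i++) {
            System.out.println(req[i]);
        }
    }
}
```

On a real device, each returned name could be passed to Player.getControl, which returns null when a control is not supported, to check that an implementation meets the table.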