Touch UI

From Series 40 6th Edition FP1 and S60 5th Edition onwards, the Series 40 and Symbian platforms support touch interaction on mobile devices with a touch screen. The touch screen is sensitive to the user's finger and device stylus, thus replacing or complementing the physical keys of the device as the main means of interaction. The touch UI allows the user to directly manipulate objects on the screen, enabling a more natural interaction with the device.

Touch devices support a variety of design possibilities that cannot be implemented in traditional key-based mobile applications. If a device has a physical keypad and a touch screen, both can be used to interact with applications.

On Series 40 and Symbian devices, touch interaction is supported by all LCDUI elements. On Symbian devices, touch interaction is also supported by all eSWT elements. You can thus create touch UIs using the same UI elements as when creating traditional key-based UIs.

High-level LCDUI elements and eSWT elements use the native touch implementation provided by the device, so MIDlets that use only these elements work on touch devices without any touch-specific code. Low-level LCDUI elements do not implement touch functionality automatically: MIDlets that use them must listen for touch events and handle those events explicitly.
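As an illustration of what handling touch events for a low-level element can involve, the following is a minimal sketch of a tappable rectangle. It is written in plain Java so that it compiles without the MIDP libraries. The class name `TouchButton` and its methods are hypothetical; in a MIDlet, a `Canvas` subclass would forward the coordinates from its own `pointerPressed`, `pointerDragged`, and `pointerReleased` callbacks to an object like this.

```java
// Hypothetical helper, not part of LCDUI: a rectangular touch target
// drawn on a low-level element. It has no MIDP dependencies, so it also
// compiles on a desktop JDK.
class TouchButton {
    private final int x, y, width, height; // bounds in screen pixels
    private boolean pressed;               // true while a touch that began inside is held
    private int clickCount;                // number of completed taps on the button

    TouchButton(int x, int y, int width, int height) {
        this.x = x;
        this.y = y;
        this.width = width;
        this.height = height;
    }

    private boolean contains(int px, int py) {
        return px >= x && px < x + width && py >= y && py < y + height;
    }

    // Touch down: arm the button only if the touch starts inside its bounds.
    void pointerPressed(int px, int py) {
        pressed = contains(px, py);
    }

    // Drag: sliding off the button cancels the pending tap.
    void pointerDragged(int px, int py) {
        if (pressed && !contains(px, py)) {
            pressed = false;
        }
    }

    // Touch release: count a tap only if the button is still armed.
    void pointerReleased(int px, int py) {
        if (pressed && contains(px, py)) {
            clickCount++;
        }
        pressed = false;
    }

    boolean isPressed() {
        return pressed;
    }

    int getClickCount() {
        return clickCount;
    }
}
```

Cancelling the tap when the finger slides off the button is a design choice of this sketch, not documented platform behavior.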

To find out which devices have a touch screen, see Nokia Developer device specifications and filter devices based on "Touch Screen".

Basic touch actions

The following table describes the basic touch actions and the corresponding touch events or event combinations registered by the touch UI.

| Action | Description | Example | Touch events |
|---|---|---|---|
| Touch | The user presses the finger or stylus against the screen. | | touch down |
| Release | The user lifts the pressed finger or stylus from the screen. | | touch release |
| Tap | The user presses the finger or stylus against the screen for a brief moment and then lifts it from the screen. | Tap | touch down + touch release ("touch down and release") |
| Long tap | The user presses the finger or stylus against the screen and holds it there for a set amount of time. The time-out value depends on the platform. Depending on the object that is long-tapped, this action can also constitute a key repeat. | Long tap | touch down and hold |
| Drag, Drag and drop | The user presses the finger or stylus against the screen and then slides it over the screen. This action can be used for scrolling or swiping content, including the whole screen, and dragging and dropping objects. In drag and drop, the user "grabs" an object on the screen by touching it, drags it to a different location on the screen, and then "drops" it by releasing touch. Note: High-level UI elements do not support drag and drop. You must implement drag and drop separately by using low-level UI elements. On Symbian devices, by default, there is a safety area of a few millimeters around the initial press point from which drag events are discarded for half a second. This avoids unnecessary drag events on short precision taps. For more information about this safety area, see section Tap detection. | Drag, Drag and drop | Drag: touch down + drag. Drag and drop: touch down + drag + touch down ("stop") + touch release |
| Flick | The user presses the finger or stylus against the screen, slides it over the screen, and then quickly lifts it from the screen in mid-slide. The user can also slide the finger or stylus off the screen. The content continues scrolling with the appropriate momentum before finally stopping. | Flick | touch down + drag + touch release while dragging |
| Pinch, Pinch open, Pinch close | To pinch open, the user presses the thumb and a finger (or two fingers) close together against the screen, moves them apart without lifting them from the screen, stops, and releases touch. To pinch close, the user presses the thumb and a finger (or two fingers) a short distance apart against the screen, moves them towards each other without lifting them from the screen, stops, and releases touch. Pinch actions can be used, for example, for zooming in and out of content such as pictures and text. Note: Pinch is supported from Java Runtime 2.0.0 for Series 40 and Java Runtime 2.2 for Symbian onwards. Earlier Series 40 and Symbian touch devices do not support pinch. | Pinch open, Pinch close | 2 x touch down + 2 x drag ("pinch") + 2 x touch down ("stop") + 2 x touch release |
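The event combinations in the table can be turned into a small classifier. The following is a rough sketch in plain Java, not the platform's own gesture detection. The class name `GestureClassifier`, the threshold values, and the explicit timestamp parameters are all assumptions made for illustration; actual long-tap time-outs and drag safety areas depend on the platform. For simplicity, a long tap is recognized only on release, whereas a real implementation would typically use a timer while the touch is held.

```java
// Hypothetical classifier for single-touch gestures. Int constants are used
// instead of an enum because CLDC-based Java ME does not support enums.
class GestureClassifier {
    static final int TAP = 0;
    static final int LONG_TAP = 1;
    static final int DRAG = 2;
    static final int FLICK = 3;

    // Assumed thresholds, chosen for illustration only.
    static final long LONG_TAP_MS = 800;     // hold time that turns a tap into a long tap
    static final int SLOP_PX = 10;           // radius around the press point where drags are ignored
    static final long FLICK_RELEASE_MS = 50; // max pause between last drag and release for a flick

    private int downX, downY;
    private long downTime, lastDragTime;
    private boolean moved;

    // "touch down"
    void touchDown(int x, int y, long timeMs) {
        downX = x;
        downY = y;
        downTime = timeMs;
        lastDragTime = timeMs;
        moved = false;
    }

    // "drag": movement inside the slop radius is discarded so that small
    // finger jitter during a tap does not count as a drag.
    void drag(int x, int y, long timeMs) {
        int dx = x - downX;
        int dy = y - downY;
        if (dx * dx + dy * dy > SLOP_PX * SLOP_PX) {
            moved = true;
        }
        if (moved) {
            lastDragTime = timeMs;
        }
    }

    // "touch release": returns one of TAP, LONG_TAP, DRAG, FLICK.
    int touchRelease(long timeMs) {
        if (!moved) {
            return (timeMs - downTime >= LONG_TAP_MS) ? LONG_TAP : TAP;
        }
        // Released while still dragging means flick; released after the
        // pointer has stopped means an ordinary drag (or drag and drop).
        return (timeMs - lastDragTime <= FLICK_RELEASE_MS) ? FLICK : DRAG;
    }
}
```

Passing timestamps in as parameters, rather than reading the system clock inside the class, keeps the sketch deterministic and easy to exercise with recorded event sequences.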

Multipoint touch

From Java Runtime 2.0.0 for Series 40 and Java Runtime 2.2 for Symbian onwards, Series 40 and Symbian devices support multipoint touch events. Series 40 devices support multipoint touch events on Canvas and Canvas-based elements, such as GameCanvas and FullCanvas, and on CustomItem. Symbian devices support multipoint touch events on Canvas and GameCanvas. The maximum number of touch points per event depends on the device.

For more information, see section Implementing multipoint touch functionality for Series 40 and section Multipoint touch for Symbian.
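To show how multipoint events can be combined into a pinch gesture, the following is a minimal sketch in plain Java. It is not tied to either platform's multipoint API: how pointer identifiers are delivered differs between Series 40 and Symbian and is covered in the sections referenced above. The class name `PinchTracker` and the assumption that the two touch points arrive with the identifiers 0 and 1 are hypothetical.

```java
// Hypothetical two-pointer tracker that derives a zoom factor from the
// distance between the touch points. Pointer ids other than 0 and 1 are ignored.
class PinchTracker {
    private final int[] xs = new int[2];
    private final int[] ys = new int[2];
    private final boolean[] down = new boolean[2];
    private double startDistance = -1; // distance when the second pointer landed, -1 if no pinch

    private static boolean valid(int id) {
        return id == 0 || id == 1;
    }

    private double distance() {
        double dx = xs[0] - xs[1];
        double dy = ys[0] - ys[1];
        return Math.sqrt(dx * dx + dy * dy);
    }

    void pointerPressed(int id, int x, int y) {
        if (!valid(id)) {
            return;
        }
        xs[id] = x;
        ys[id] = y;
        down[id] = true;
        // The pinch starts when both pointers are on the screen.
        if (down[0] && down[1] && startDistance < 0) {
            startDistance = distance();
        }
    }

    void pointerDragged(int id, int x, int y) {
        if (valid(id) && down[id]) {
            xs[id] = x;
            ys[id] = y;
        }
    }

    void pointerReleased(int id) {
        if (valid(id)) {
            down[id] = false;
            startDistance = -1; // lifting either pointer ends the pinch
        }
    }

    // Greater than 1.0 for pinch open, less than 1.0 for pinch close,
    // and exactly 1.0 when no pinch is in progress.
    double getScale() {
        if (startDistance <= 0 || !(down[0] && down[1])) {
            return 1.0;
        }
        return distance() / startDistance;
    }
}
```

A MIDlet could multiply the zoom level of a picture by `getScale()` while the pinch is in progress and commit the result when either pointer is released.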

Moving content on the screen

Touch devices allow users to scroll content without using the scroll bar. The content can be scrolled by directly dragging or flicking the content. If the content is flicked, the device applies kinetic momentum to the scroll effect: the content continues scrolling in the direction of the flick with decreasing speed, as though it has physical mass.

In MIDlets, Forms and Lists support direct content scrolling automatically. For low-level LCDUI elements, direct content scrolling and any scroll effects must be implemented separately.
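For low-level elements, the kinetic effect described above can be approximated with a simple decaying velocity. The following plain-Java sketch is one possible model, not the algorithm used by the platform. The class name `KineticScroller`, the friction factor, and the stop threshold are assumptions chosen for illustration; `step()` is meant to be called once per animation frame, for example from a timer.

```java
// Hypothetical kinetic scroller: after a flick, the content keeps moving
// in the flick direction with decreasing speed until it stops or reaches
// the end of the scrollable range.
class KineticScroller {
    static final double FRICTION = 0.9;     // assumed: fraction of velocity kept per frame
    static final double MIN_VELOCITY = 0.5; // assumed: below this (pixels per frame), stop

    private final double maxOffset; // largest valid scroll offset in pixels
    private double offset;          // current scroll offset in pixels
    private double velocity;        // current speed in pixels per frame

    KineticScroller(double maxOffset) {
        this.maxOffset = maxOffset;
    }

    private double clamp(double value) {
        if (value < 0) {
            return 0;
        }
        return value > maxOffset ? maxOffset : value;
    }

    // Direct drag: the content follows the finger and any momentum is cancelled.
    void dragBy(double delta) {
        velocity = 0;
        offset = clamp(offset + delta);
    }

    // Flick: start kinetic scrolling with the release speed.
    void flick(double releaseVelocity) {
        velocity = releaseVelocity;
    }

    // Advances the animation by one frame. Returns true while scrolling continues.
    boolean step() {
        if (Math.abs(velocity) < MIN_VELOCITY) {
            velocity = 0;
            return false;
        }
        offset = clamp(offset + velocity);
        velocity *= FRICTION;
        if (offset == 0 || offset == maxOffset) {
            velocity = 0; // reached the edge of the content
        }
        return velocity != 0;
    }

    double getOffset() {
        return offset;
    }
}
```

With these values, each frame moves the content 10 percent less than the previous one, which gives the impression of physical mass.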

The following table shows from which platform release onwards the different scroll effects are supported.

| Scroll effect | Supported since (Series 40) | Supported since (Symbian) |
|---|---|---|
| Drag | Series 40 6th Edition FP1 | S60 5th Edition with Java Runtime 1.4 for S60. Note: On S60 5th Edition devices, content can be scrolled by dragging the focus on the screen. |
| Flick | Series 40 6th Edition FP1 | S60 5th Edition with Java Runtime 1.4 for S60. Note: Early S60 5th Edition devices do not support this effect. |

Text input

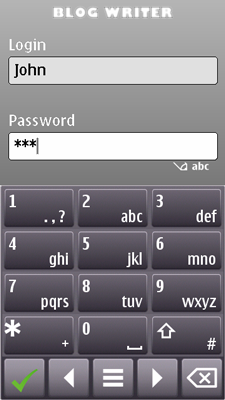

If the user taps a text editor UI element on a touch device with no physical keypad, the virtual keyboard opens. Depending on the device and UI element, the virtual keyboard can open either in split view mode, where the screen is split between the UI element and the virtual keyboard, or in full screen mode, where the virtual keyboard fills the whole screen and the editable text is shown in a text field embedded in the virtual keyboard.

Figure: Text input in split view mode on a Symbian device

If the user taps a text editor UI element on a touch device with a physical keypad, the user provides the input using the physical keypad. No virtual keyboard is opened.

Virtual keyboard on Series 40 devices

The following text editors support split view input in portrait mode:

Interactive Gauge on Form

TextEditor on Canvas

TextField on Form

In landscape mode, text editors always use full screen input.

In addition, MIDlets can use the Virtual Keyboard API to launch a virtual keyboard for Canvas and Canvas-based elements, such as GameCanvas and FullCanvas, and for active CustomItem elements in portrait mode.

Virtual keyboard on Symbian devices

The following text editors support split view input:

DateField on Form

TextEditor on Canvas

TextField on Form

The following text editors support only full screen input:

TextBox

Pop-up TextBox

For more information about text input on Symbian touch devices, see the Nokia Symbian^3 Developer's Library.

More information

For more information about touch interaction on Series 40 and Symbian devices, see:

Designing touch interaction for basic guidelines on designing MIDlets for touch devices

Touch interaction in Series 40 for information about how to create touch UI MIDlets for Series 40 devices

Touch interaction in Symbian for information about how to create touch UI MIDlets for Symbian devices

LCDUI elements in touch interaction for information about how common LCDUI elements behave on touch devices

Tactile feedback for information about tactile feedback support on touch devices

Tap detection for information about suppressing drag events in order to correctly detect taps

Example: Creating a scroller list for an example MIDlet that shows you how to use the Frame Animator API together with the Gesture API

Example: Creating a game using the Gesture API for an example MIDlet that shows you how to use the Gesture API to implement touch interaction in a game application

Example: Using multipoint touch events 1 and Example: Using multipoint touch events 2 for example MIDlets that show you how to use multipoint touch events on Symbian devices

Example: Drawing with Paint for an example MIDlet that shows you how to create a simple paint application.

http://www.developer.nokia.com/Community/Wiki/How_to_enable_Action_Button_1_when_using_Lists_on_Series_40_Full_Touch for information about how to enable Action Button 1 when using Lists on Series 40 Full Touch