Receiving gesture events

To handle gesture events and thereby implement a touch UI, a MIDlet must be able to receive gesture events for its UI elements. The MIDlet must separately set each UI element to receive gesture events.

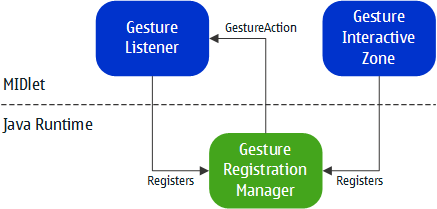

The following figure shows the relationship between the Gesture API classes required for receiving gesture events.

Figure: Relationship between Gesture API classes

Note: The following instructions and code snippets focus only on the Gesture API. For instructions on creating a full MIDlet, see sections Getting started and MIDlet lifecycle. For instructions on using the `Canvas` class in a MIDlet, see section Canvas.

To receive gesture events:

1. Create the UI element. The element can be either a `Canvas` or a `CustomItem`.

   The following code snippet creates a custom `Canvas` class called `GestureCanvas`.

   ```java
   // Import the necessary classes
   import com.nokia.mid.ui.gestures.GestureInteractiveZone;
   import com.nokia.mid.ui.gestures.GestureRegistrationManager;
   import javax.microedition.lcdui.Canvas;

   public class GestureCanvas extends Canvas {

       public GestureCanvas() {
           // Register a GestureListener for GestureCanvas
           // (see step 2 of this example)

           // Register a GestureInteractiveZone for GestureCanvas
           // (see step 3 of this example)
       }

       // Create the Canvas UI by implementing the paint method and
       // other necessary methods (see the LCDUI instructions)
       // ...
   }
   ```

2. Register a `GestureListener` for the UI element. The `GestureListener` notifies the MIDlet of gesture events associated with the UI element. Each UI element can have only one `GestureListener` registered for it. However, you can register the same `GestureListener` for multiple UI elements.

   The following code snippet registers a custom `GestureListener` called `MyGestureListener` for `GestureCanvas`.

   ```java
   // Create a listener instance
   MyGestureListener myGestureListener = new MyGestureListener();

   // Set the listener to register events for GestureCanvas (this)
   GestureRegistrationManager.setListener(this, myGestureListener);
   ```

   For detailed information about the `MyGestureListener` implementation, see section Handling gesture events.

3. Register a `GestureInteractiveZone` for the UI element. The `GestureInteractiveZone` defines the touchable screen area, a rectangle-shaped interactive zone, from which the `GestureListener` receives gesture events for the UI element. The `GestureInteractiveZone` also defines which types of gesture events the listener receives from this area. Each type corresponds to one of the Basic touch actions. To specify which gesture events are received, use the following value constants when creating the `GestureInteractiveZone` instance:

   Table: Supported gesture events

   | Value | Description |
   |---|---|
   | `GESTURE_ALL` | Receive all gesture events. |
   | `GESTURE_TAP` | Receive taps. |
   | `GESTURE_DOUBLE_TAP` | Receive double taps. |
   | `GESTURE_LONG_PRESS` | Receive long taps. |
   | `GESTURE_LONG_PRESS_REPEATED` | Receive repeated long taps. |
   | `GESTURE_DRAG` | Receive drag events. |
   | `GESTURE_DROP` | Receive drop events. |
   | `GESTURE_FLICK` | Receive flicks. |
   | `GESTURE_PINCH` | Receive pinches. Note: This gesture event is supported from Java Runtime 2.0.0 for Series 40 onwards. |
   | `GESTURE_RECOGNITION_START` | Receive gesture recognition start events. A gesture recognition start event represents the initial state of gesture recognition. Touch down always generates a gesture recognition start event, signaling that gesture recognition has started. Use gesture recognition start events together with gesture recognition end events to keep track of the state of gesture recognition. Note: You cannot use gesture recognition start events to track individual fingers. To track individual fingers, use the Multipoint Touch API. Note: This gesture event is supported from Java Runtime 2.0.0 for Series 40 onwards. |
   | `GESTURE_RECOGNITION_END` | Receive gesture recognition end events. A gesture recognition end event represents the end state of gesture recognition. Touch release always generates a gesture recognition end event, signaling that gesture recognition has ended. Use gesture recognition end events together with gesture recognition start events to keep track of the state of gesture recognition. Note: You cannot use gesture recognition end events to track individual fingers. To track individual fingers, use the Multipoint Touch API. Note: This gesture event is supported from Java Runtime 2.0.0 for Series 40 onwards. |

   Note: `GESTURE_DOUBLE_TAP` is supported only in Nokia Asha software platform devices.

   To change the set of gesture events received from the area, use the `setGestures` method after you create the `GestureInteractiveZone` instance. The method replaces the current set, so pass every gesture type the zone should receive, combined with the bitwise OR operator.

   By default, the touchable screen area corresponds to the area taken up by the UI element. To define a different area, use the `setRectangle` method on the `GestureInteractiveZone` instance. The location of the area is defined relative to the upper left corner of the UI element.

   You can register a specific `GestureInteractiveZone` for only a single UI element. However, a UI element can have multiple `GestureInteractiveZones` registered for it. This is useful, for example, when you create a `Canvas` with multiple `Images` and want to make each `Image` an independent interactive element. In this case, you register multiple `GestureInteractiveZones` for the `Canvas`, one for each `Image`, and set the touchable areas to match the areas taken up by the `Images`.

   `GestureInteractiveZones` can overlap. The `GestureListener` associated with each overlapping zone receives all gesture events for that zone.

   The following code snippet registers a single `GestureInteractiveZone` for `GestureCanvas`. The `GestureInteractiveZone` is set to receive taps and long taps, and its screen area is set to 40x20 pixels positioned in the upper left corner (0,0) of `GestureCanvas`.

   ```java
   // Create an interactive zone and set it to receive taps
   GestureInteractiveZone myGestureZone =
           new GestureInteractiveZone(GestureInteractiveZone.GESTURE_TAP);

   // Set the interactive zone to also receive long taps. setGestures replaces
   // the current set, so GESTURE_TAP is included again.
   myGestureZone.setGestures(GestureInteractiveZone.GESTURE_TAP
           | GestureInteractiveZone.GESTURE_LONG_PRESS);

   // Set the location (relative to the container) and size of the interactive zone:
   // x=0, y=0, width=40px, height=20px
   myGestureZone.setRectangle(0, 0, 40, 20);

   // Register the interactive zone for GestureCanvas (this)
   GestureRegistrationManager.register(this, myGestureZone);
   ```
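The multiple-zone case described in step 3 (one `GestureInteractiveZone` per `Image` on a `Canvas`) could be approached as in the following minimal sketch. The `ZoneLayout` class, its `rowOfZones` method, and the layout values are hypothetical and not part of the Gesture API; the sketch assumes equally sized images placed in a single horizontal row. Only the rectangle arithmetic is executable here. The registration calls are shown as comments because they need the Nokia SDK classes.

```java
// Hypothetical helper, not part of the Gesture API: computes one touchable
// rectangle per image for a horizontal row of equally sized images.
final class ZoneLayout {

    private ZoneLayout() {
        // Static helper only
    }

    /**
     * Returns {x, y, width, height} for each image, relative to the
     * upper left corner of the UI element.
     */
    static int[][] rowOfZones(int count, int width, int height, int gap) {
        if (count < 0 || width <= 0 || height <= 0 || gap < 0) {
            throw new IllegalArgumentException("Invalid layout values");
        }
        int[][] rects = new int[count][];
        for (int i = 0; i < count; i++) {
            // Each image starts one image width plus one gap after the previous one
            rects[i] = new int[] { i * (width + gap), 0, width, height };
        }
        return rects;
    }
}

// Inside the GestureCanvas constructor, each rectangle would get its own zone:
//
//     int[][] rects = ZoneLayout.rowOfZones(3, 40, 20, 5);
//     for (int i = 0; i < rects.length; i++) {
//         GestureInteractiveZone zone =
//                 new GestureInteractiveZone(GestureInteractiveZone.GESTURE_TAP);
//         zone.setRectangle(rects[i][0], rects[i][1], rects[i][2], rects[i][3]);
//         GestureRegistrationManager.register(this, zone);
//     }
```

Keeping the layout arithmetic separate from the registration calls means the same rectangles can also be reused when painting the images, so the touchable areas and the drawn areas stay in sync.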

Now that you have created the UI element and set it to receive gesture events, define how it handles the gesture events by implementing the `GestureListener` interface.
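As one illustration of handling, the pairing of gesture recognition start and end events described in the table above can be tracked with a small state holder. This is a sketch under assumptions, not the documented approach: `RecognitionTracker` and its method names are hypothetical, and the commented listener code assumes that the zone was registered with `GESTURE_RECOGNITION_START` and `GESTURE_RECOGNITION_END` and that the listener is notified through the `gestureAction` callback of `GestureListener`.

```java
// Hypothetical helper, not part of the Gesture API: remembers whether a
// gesture is currently being recognized.
final class RecognitionTracker {

    private boolean active;

    /** Call when a GESTURE_RECOGNITION_START event arrives. */
    void onRecognitionStart() {
        active = true;
    }

    /** Call when a GESTURE_RECOGNITION_END event arrives. */
    void onRecognitionEnd() {
        active = false;
    }

    /** Returns true between a start event and the following end event. */
    boolean isActive() {
        return active;
    }
}

// In a GestureListener implementation, gestureAction could drive the tracker:
//
//     public void gestureAction(Object container,
//             GestureInteractiveZone zone, GestureEvent event) {
//         switch (event.getType()) {
//             case GestureInteractiveZone.GESTURE_RECOGNITION_START:
//                 tracker.onRecognitionStart();
//                 break;
//             case GestureInteractiveZone.GESTURE_RECOGNITION_END:
//                 tracker.onRecognitionEnd();
//                 break;
//             default:
//                 break;
//         }
//     }
```

The tracker only records whether recognition is in progress. As noted in the table, it cannot distinguish individual fingers.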